By: Ken Adams

The Problem

I recently ran across an issue on a project that required a large amount of data to be removed from a table, specifically an issue with the loading of the table causing duplication of data.

All non-clustered indexes had been removed before the data load to speed loading. This included a unique index, so therefore we ended up with duplicates. The data loading portion of this could be a blog post for another day.

This table was too large ( > 10 billion rows) to flush the data and refill given the timeframe. The alternative was to try and remove any duplicate records. An example of this might be data archival. In this instance, you’d want to physically delete data that was older and possibly for a specific customer or group of customers.

In our situation, the table was partitioned. We were in a production environment and had to be very cognizant of competing resources. The primary concern was making sure the transaction log didn’t blow out the storage drive.

This can happen when you perform large delete operations in a single transaction. The answer to this problem was removing the data in smaller chunks, or batches, to lessen the impact on the transaction log.

Setup

For the purposes of this post, I used a copy of the AdventureWorksDW database which you can find here. I’m running the scripts on a fairly small Oracle VirtualBox machine with SQL Server 2019 installed.

The AdventureWorksDW that I had was an older backup, but I did change the compatibility level to 150 before running anything. This really shouldn’t matter but thought I’d point it out. The database was also set to simple recovery mode.

I chose one of the larger fact tables to use as my subject. The table is FactResellerSales. The table that came with the database had around 60K rows and that was not enough. I set up a new table and partitioned that table by year on the OrderDate.

To grow the data, I ran the following script below. This script loads FactResellerSales into the new partitioned and then executes 25 inserts of the same data randomized:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 |

TRUNCATE TABLE dbo.FactResellerSalesPartitioned; --initial insert INSERT INTO dbo.FactResellerSalesPartitioned ( ProductKey, OrderDateKey, DueDateKey, ShipDateKey, ResellerKey, EmployeeKey, PromotionKey, CurrencyKey, SalesTerritoryKey, SalesOrderNumber, SalesOrderLineNumber, RevisionNumber, OrderQuantity, UnitPrice , ExtendedAmount, UnitPriceDiscountPct, DiscountAmount, ProductStandardCost, TotalProductCost, SalesAmount, TaxAmt, Freight, CarrierTrackingNumber, CustomerPONumber, OrderDate , DueDate, ShipDate ) SELECT ProductKey, OrderDateKey, DueDateKey, ShipDateKey, ResellerKey, EmployeeKey, PromotionKey, CurrencyKey, SalesTerritoryKey, SalesOrderNumber, SalesOrderLineNumber, RevisionNumber, OrderQuantity, UnitPrice , ExtendedAmount, UnitPriceDiscountPct, DiscountAmount, ProductStandardCost, TotalProductCost, SalesAmount, TaxAmt, Freight, CarrierTrackingNumber, CustomerPONumber, OrderDate , DueDate, ShipDate FROM dbo.FactResellerSales WITH (HOLDLOCK TABLOCKX) ; GO --now multiple inserts of randomized rows (based on work by Jonathan M. Kehayias - https://github.com/ktaranov/sqlserver-kit/blob/master/Sample_Databases/Create_Enlarged_Adventureworks_Tables.sql) INSERT INTO dbo.FactResellerSalesPartitioned ( ProductKey, OrderDateKey, DueDateKey, ShipDateKey, ResellerKey, EmployeeKey, PromotionKey, CurrencyKey, SalesTerritoryKey, SalesOrderNumber, SalesOrderLineNumber, RevisionNumber, OrderQuantity, UnitPrice , ExtendedAmount, UnitPriceDiscountPct, DiscountAmount, ProductStandardCost, TotalProductCost, SalesAmount, TaxAmt, Freight, CarrierTrackingNumber, CustomerPONumber, OrderDate , DueDate, ShipDate ) SELECT ProductKey, OrderDateKey, DueDateKey, ShipDateKey, ResellerKey, EmployeeKey, PromotionKey, CurrencyKey, SalesTerritoryKey, SalesOrderNumber, SalesOrderLineNumber, RevisionNumber, OrderQuantity, UnitPrice , ExtendedAmount, UnitPriceDiscountPct, DiscountAmount, ProductStandardCost, TotalProductCost, SalesAmount, TaxAmt, Freight, CarrierTrackingNumber, CustomerPONumber, DATEADD(dd, number, OrderDate) AS OrderDate , DATEADD(dd, number, DueDate) AS DueDate, DATEADD(dd, number, ShipDate) AS ShipDate FROM dbo.FactResellerSales AS soh WITH (HOLDLOCK TABLOCKX) CROSS JOIN ( SELECT number FROM ( SELECT TOP 10 number FROM master.dbo.spt_values WHERE type = N'P' ORDER BY NEWID() DESC UNION SELECT TOP 10 number FROM master.dbo.spt_values WHERE type = N'P' ORDER BY NEWID() DESC UNION SELECT TOP 10 number FROM master.dbo.spt_values WHERE type = N'P' ORDER BY NEWID() DESC UNION SELECT TOP 10 number FROM master.dbo.spt_values WHERE type = N'P' ORDER BY NEWID() DESC ) AS tab ) AS Randomizer ORDER BY OrderDate, number GO 20 |

Then I ran the delete script, which is made up of 3 primary parts: the identification of the partitions, creation of an outer cursor to loop through those partitions, and an inner delete filtered on the partition date range.

The inner delete filtered on a handful of ProductKeys, which I chose at random. Adding the ProductKey filter more closely mimicked the scenario I encountered at the client site.

Identify the Partitions:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 |

--get partitions and load into temp table DROP TABLE IF EXISTS #PartitionBoundaries; SELECT CAST(p.partition_number AS BIGINT) AS [Partition] , CASE p.partition_number WHEN 1 THEN '1900-01-01 00:00:00.000' ELSE NULL END AS LowerBound , CASE WHEN prv.value IS NULL THEN '2079-06-06 00:00:00.000' ELSE CAST(prv.value AS DATETIME) END AS UpperBound , prv.value AS PartitionBoundary , stat.row_count AS RowCnt INTO #PartitionBoundaries FROM sys.tables t INNER JOIN sys.schemas s ON s.schema_id = t.schema_id INNER JOIN sys.partitions p ON t.object_id = p.object_id AND p.index_id = 1 INNER JOIN sys.indexes i ON p.object_id = i.object_id AND p.index_id = i.index_id INNER JOIN sys.data_spaces ds ON i.data_space_id = ds.data_space_id INNER JOIN sys.partition_schemes ps ON ds.data_space_id = ps.data_space_id INNER JOIN sys.partition_functions pf ON ps.function_id = pf.function_id LEFT JOIN sys.partition_range_values prv ON pf.function_id = prv.function_id AND p.partition_number = prv.boundary_id LEFT JOIN sys.dm_db_partition_stats stat ON p.partition_id = stat.partition_id WHERE 1 = 1 AND is_ms_shipped = 0 AND EXISTS ( SELECT 1 FROM sys.partitions p2 WHERE t.object_id = p2.object_id AND partition_number > 1 ) AND t.name = 'FactResellerSalesPartitioned' AND s.name = 'dbo' ; |

Declare a Partition Cursor:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

--declare outer cursor for partitions DECLARE PartitionCursor CURSOR STATIC FOR --put them in an outer cursor SELECT t1.PARTITION AS PartitionNumber , ISNULL(CAST(t1.LowerBound AS DATETIME), CAST(t2.PartitionBoundary AS DATETIME)) AS LowerBound , CAST(t1.UpperBound AS DATETIME) AS UpperBound FROM #PartitionBoundaries t1 LEFT JOIN #PartitionBoundaries t2 ON t1.PARTITION = t2.PARTITION + 1 WHERE t1.RowCnt > 0 --narrowed down to partitions with data ORDER BY 1 ; OPEN PartitionCursor; FETCH NEXT FROM PartitionCursor INTO @PartitionNumber, @LowerBound, @UpperBound; WHILE @@FETCH_STATUS = 0 BEGIN --inner DELETE is here --grab the next partition for processing FETCH NEXT FROM PartitionCursor INTO @PartitionNumber, @LowerBound, @UpperBound; END --close and deallocate the cursor once all batches for a given table have been processed CLOSE PartitionCursor; DEALLOCATE PartitionCursor; |

Inner DELETE With Very Simple Logging and Error Handling:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 |

--use an inner loop to batch DELETE the rows within a partition SET @Rows = 1; WHILE @Rows > 0 BEGIN BEGIN TRY --start a log record INSERT INTO dbo.Delete_Log(PartitionNumber, LowerBound, UpperBound, StartDateTime) SELECT @PartitionNumber, @LowerBound, @UpperBound, GETDATE() ; SET @RowID = SCOPE_IDENTITY() DELETE TOP (500000) --this number can be changed FROM dbo.FactResellerSalesPartitioned WHERE OrderDate >= @LowerBound AND OrderDate < @UpperBound AND ProductKey IN --selected list of products ( 471 , 458 , 221 , 234 , 222 ) SET @Rows = @@ROWCOUNT; --after deleting batch then update the ViewAlertTrackingRecipient_Delete_Log table UPDATE dbo.Delete_Log SET EndDateTime = GETDATE() , RowCnt = @Rows WHERE PartitionNumber = @PartitionNumber AND RowID = @RowID ; END TRY BEGIN CATCH DECLARE @Message VARCHAR(4000) , @Severity INT , @State INT; SELECT @Message = error_message() , @Severity = error_severity() , @State = error_state(); RAISERROR ( @Message , @Severity , @State ); RETURN; END CATCH; END |

Execution

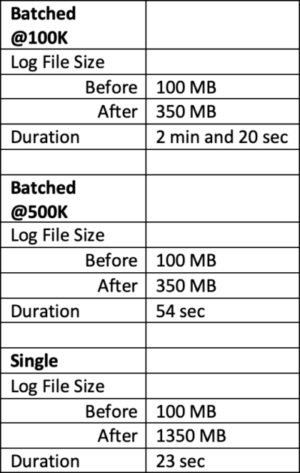

The size of the FactResellerSales after the INSERT script was around 60 million records. With the table in place, I also shrank the transaction log to reset the size. The goal is to measure the impact of a single transaction versus a batched delete. I also captured the run time of each. The results are below:

So what does it all mean? Well, the delete in a single transaction took half the time of the 500K batched delete and 1/6 of the time 100K batched delete. That is not insignificant, although the goal was not geared toward performance.

In our situation, we were seeking the option that minimized impact on the transaction log in the most performant manner possible. You’ll note that the transaction log impact was fairly significant for the single transaction delete – it was about 4 times greater versus both batched deletes. While this might not scale linearly, it would be very significant when you’re talking about a table that is billions of rows.

The impact of the table being partitioned means that less data is scanned for each delete, which should help the performance of the batched delete. You do need to make sure that the data type of the filter and partition column match – see below (OrderDate is a DATETIME):

|

1 2 3 4 5 6 7 8 |

DECLARE @Rows AS INT ,@PartitionNumber INT ,@LowerBound DATETIME ,@UpperBound DATETIME ,@RowID SMALLINT; WHERE OrderDate >= @LowerBound AND OrderDate < @UpperBound |

Conclusion

While you might look at the performance in the example above and jump all over that, be sure to consider the impact on your SQL Server environment. As mentioned, this was key in our case.

We could not risk a huge impact on storage due to a growing transaction log on a server where there were ongoing ETL processes. We chose to batch but also used the existing partitions to help minimize the amount of data being scanned. In regards to the appropriate batch size, you will need to do some experimenting.

Is this a scenario you have run across at your job? How did you handle it? If it is something you have struggled with or have questions about let us know. Also, feel free to speak up in the comments.

Thanks for Reading! Questions?

Thanks for reading! We hope you found this blog post useful. Feel free to let us know if you have any questions about this article by simply leaving a comment below. We will reply as quickly as we can.

Keep Your Data Analytics Knowledge Sharp

Get fresh Key2 content and more delivered right to your inbox!

About Us

Key2 Consulting is a boutique data analytics consultancy that helps business leaders make better business decisions. We are a Microsoft Gold-Certified Partner and are located in Atlanta, Georgia. Learn more here.