Expert Azure Data Factory Consulting Services

Azure Data Factory can be an invaluable tool for your organization when it is properly optimized. Our Azure Data Factory consulting services and solutions can help you get there.

What is Azure Data Factory?

Azure Data Factory (ADF) is a cloud-based data integration service that enables organizations to perform big data acquisition and enrichment at scale from disparate sources that exist on-prem or in the cloud.

It’s impressive that ADF can achieve this, given that the service does not store any data itself and hands the heavy processing off to other compute services.

So how does it do it?

ADF orchestrates data movement and enrichment by leveraging big data compute services (like Apache Spark) and other cloud-based resources. It features built-in connectors to dozens of on-prem data sources (Oracle, SQL Server, FTP, File Share, and SharePoint, to name a few) and to major cloud platforms, including Salesforce, SAP, and ServiceNow.

In short, you tell ADF what to do, and it directs the cloud-based resources to do the heavy lifting.

Who is Azure Data Factory designed for?

Azure Data Factory is well suited to large organizations whose data volumes call for a data lake to support analytics and machine learning. It is also a strong fit for companies of any size that want a low-code tool they can stand up quickly to address pressing integration needs.

How We Can Help You Create Dynamic and Data-Driven Pipelines

Whether your data source comprises hundreds of structured database tables or thousands of semi-structured JSON files (or both), Key2 Consulting can help your organization develop generic and reusable ADF pipelines that are driven by metadata and parameters rather than fixed configurations. There is no need to hardcode a specific table name or file share location anywhere in a pipeline.

For example, a single parent-child pipeline combination can be developed once and reused to process data from dozens of SQL Server tables in parallel, from any SQL Server database. These pipelines can also be configured to infer schemas and allow for schema growth, eliminating the need to update the pipeline as your source systems evolve.
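As a rough illustration of the parent-child pattern described above, the sketch below uses Python to assemble the JSON definition of a parent pipeline: a Lookup activity reads the list of tables from a control table, and a ForEach activity runs a child pipeline once per row, in parallel. This is a minimal sketch under stated assumptions, not our production design. The pipeline, dataset, control-table, and parameter names (PL_Parent_LoadTables, DS_ControlDb, etl.TableList, SchemaName, TableName) are hypothetical, and the property names should be checked against the current ADF pipeline JSON reference before use.

```python
import json


def build_parent_pipeline(control_dataset: str, child_pipeline: str,
                          batch_count: int = 10) -> dict:
    """Build a parent pipeline definition that fans out over metadata rows.

    All names below are hypothetical placeholders for illustration only.
    """
    # Step 1: read the list of tables to process from a control table.
    lookup = {
        "name": "LookupTableList",
        "type": "Lookup",
        "typeProperties": {
            "source": {
                "type": "AzureSqlSource",
                # Hypothetical control table holding one row per source table.
                "sqlReaderQuery": (
                    "SELECT SchemaName, TableName "
                    "FROM etl.TableList WHERE IsEnabled = 1"
                ),
            },
            "dataset": {"referenceName": control_dataset,
                        "type": "DatasetReference"},
            "firstRowOnly": False,  # return every row, not just the first
        },
    }

    # Step 2: call the child pipeline once per row, passing table metadata
    # as parameters so nothing is hardcoded in the child.
    run_child = {
        "name": "RunChildPipeline",
        "type": "ExecutePipeline",
        "typeProperties": {
            "pipeline": {"referenceName": child_pipeline,
                         "type": "PipelineReference"},
            "waitOnCompletion": True,
            "parameters": {
                "SchemaName": {"value": "@item().SchemaName",
                               "type": "Expression"},
                "TableName": {"value": "@item().TableName",
                              "type": "Expression"},
            },
        },
    }

    for_each = {
        "name": "ForEachTable",
        "type": "ForEach",
        "dependsOn": [{"activity": "LookupTableList",
                       "dependencyConditions": ["Succeeded"]}],
        "typeProperties": {
            "items": {"value": "@activity('LookupTableList').output.value",
                      "type": "Expression"},
            "isSequential": False,      # process tables in parallel
            "batchCount": batch_count,  # cap on concurrent iterations
            "activities": [run_child],
        },
    }

    return {
        "name": "PL_Parent_LoadTables",
        "properties": {"activities": [lookup, for_each]},
    }


pipeline = build_parent_pipeline("DS_ControlDb", "PL_Child_CopyTable")
print(json.dumps(pipeline, indent=2))
```

Because the table list lives in the control table rather than in the pipeline, adding a new source table in this sketch means inserting a row, not editing or redeploying the pipeline.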

Azure Data Factory Consulting To Help You Maximize Your Data Integration Efforts

Whether you are looking to stand up a traditional data lake or implement a lakehouse with a medallion architecture, our company can help you design and build an Azure Data Factory solution that meets your organization’s specific data quality, performance, security, and budgetary requirements.

ADF’s low-code, intuitive drag-and-drop interface allows data engineers to transition easily from a legacy integration tool. ADF also helps avoid the challenges of supporting a multitude of custom transformation scripts that may be written in one or more programming languages.

If your organization is currently using SQL Server Integration Services (SSIS) and is exploring the option of transitioning to ADF or migrating your SSIS workload into Azure, we can help.

Ready to maximize Azure Data Factory? Contact us today.

Our company is a Microsoft Gold-Certified Partner with years of Azure consulting experience. We can help you get the most out of ADF.

Some of Our Azure Content

Creating Folders in Power BI Workspaces

Creating folders in Power BI is a new capability recently announced by Microsoft. In this blog, we explore the benefits of Power BI folders and how to create them.

Exploring Our End-to-End Custom Azure Solution – Part 2

Here’s Part 2 of our series on our custom end-to-end Azure solution. Our solution involves various Azure products, Power BI, and more.

Understanding Power BI Filters, Slicers, & Highlighting

We explore three highly useful Power BI features that enhance data analysis: highlighting, filtering, and slicing.

How to Connect Power BI to Serverless Azure Synapse Analytics

Here’s a solution we created for accessing Azure Data Lake Storage Gen2 data from Power BI using Azure Synapse Analytics.

How to Use Azure AI Language for Sentiment Analysis

Learn about sentiment analysis in Azure, including how to leverage Azure AI Language services to conduct it.

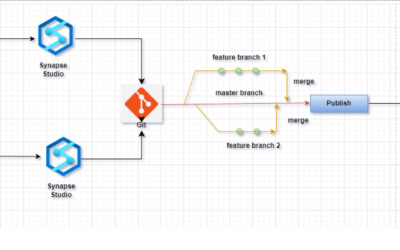

GitHub Source Control Integration with Azure Synapse Workspace

GitHub source control integration with Azure Synapse workspace allows data professionals to manage scripts, notebooks, and pipelines in a version-controlled environment.

Key2 Consulting | [email protected] | (678) 835-8539