By: Brad Harris

The Problem

Over the past couple of months, I have been working with Azure Data Factory (ADF) on a project in which we are migrating all of our on-premises data to the cloud using a combination of Azure Data Factory and Azure Databricks.

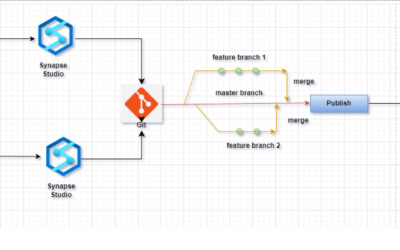

Like most solutions, ours has evolved from simple pipelines that each perform a single task into a solution that can be packaged up and deployed to multiple environments, such as a Dev -> PreProd -> Prod implementation.

This type of implementation involves many different parts, and deployment grew more complicated as time went on. That complexity made manual deployments to each of our environments impractical, so we as a team started down the path of continuous integration and automated deployments.

We started down this road using GitHub Actions that deploy ADF pipelines, Databricks notebooks, and Azure SQL databases to specific environments based on parameters we defined in the .yml files used by those GitHub Actions.

While this process worked out great, we were always looking for better ways to catch errors and streamline the deployment process.

One of the items we identified as needing streamlining was testing Azure Data Factory linked services before and after items were deployed to specific environments. Testing would keep items with a wrong setting from being deployed to an environment, where they would cause downstream pipelines that depend on those linked services to fail when run after deployment.

The Solution – Use PowerShell to Test Azure Data Factory

We set out to find a solution that would allow us to test Azure Data Factory linked services. While there was not much documentation on how this could be done, the team found a way.

Using PowerShell, I was able to compose a script that logs in to our ADF environment and lists the linked services. The script then tests the connectivity of each linked service, one by one. Each test returns true or false for connectivity, along with any errors that need to be corrected before deployment can continue.

The Script

I have to start by giving a big shout-out to Simon D’Morias, as a lot of the legwork was already done in his base script, which is available on GitHub.

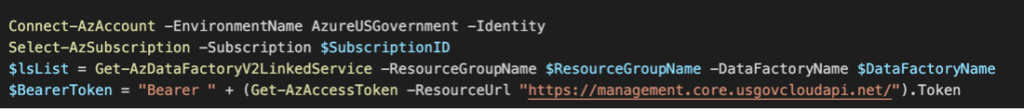

The base script was my starting point for what we needed to accomplish. Essentially, the script logs into your Azure subscription and then makes a REST API call to get a bearer token used in any further authentication attempts.

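```powershell
function getBearer([string]$TenantID, [string]$ClientID, [string]$ClientSecret) {
    $TokenEndpoint = "https://login.windows.net/{0}/oauth2/token" -f $TenantID
    $ARMResource = "https://management.core.windows.net/"

    # Client-credentials grant: the service principal requests a token for Azure Resource Manager
    $Body = @{
        'resource'      = $ARMResource
        'client_id'     = $ClientID
        'grant_type'    = 'client_credentials'
        'client_secret' = $ClientSecret
    }

    # Request the token and return it in "Bearer <token>" form for the Authorization header
    $params = @{
        ContentType = 'application/x-www-form-urlencoded'
        Headers     = @{ 'accept' = 'application/json' }
        Body        = $Body
        Method      = 'Post'
        URI         = $TokenEndpoint
    }
    $token = Invoke-RestMethod @params
    Return "Bearer " + $token.access_token
}
```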

The base script does what it is supposed to do; however, we went down a different route.

Because our GitHub runner had a managed identity in Azure, all we had to do to authenticate was log in to Azure with authentication set to “Managed Identity.”

From there, the script calls the Azure cmdlet Get-AzAccessToken instead of making complex REST API calls by hand. It's still the REST API under the covers, but the code is a lot cleaner.
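A minimal sketch of that login step, assuming the Az.Accounts module is installed on the runner and the factory lives in Azure US Government (the endpoints later in this post use management.usgovcloudapi.net). The function names Get-AdfBearerToken and ConvertTo-BearerHeader are hypothetical. Because newer Az.Accounts releases can return the token as a SecureString, the helper accepts either a plain string or a SecureString.

```powershell
# Turns an access token (plain string or SecureString) into an Authorization header value.
function ConvertTo-BearerHeader {
    param([Parameter(Mandatory)] $Token)

    if ($Token -is [System.Security.SecureString]) {
        # Unwrap the SecureString form returned by newer Az.Accounts versions
        $Token = [System.Net.NetworkCredential]::new('', $Token).Password
    }
    return "Bearer $Token"
}

# Hypothetical wrapper: log in with the runner's managed identity and fetch an ARM token.
# Assumes Az.Accounts is installed and the factory is in Azure US Government.
function Get-AdfBearerToken {
    param([string]$ResourceUrl = 'https://management.usgovcloudapi.net/')

    Connect-AzAccount -Identity -Environment AzureUSGovernment | Out-Null
    $accessToken = Get-AzAccessToken -ResourceUrl $ResourceUrl
    return ConvertTo-BearerHeader -Token $accessToken.Token
}

# Usage on the runner:
# $BearerToken = Get-AdfBearerToken
```

The resulting string has the same "Bearer ..." shape as the base script's getBearer output, so it can be dropped into the Authorization header of the REST calls unchanged.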

With the bearer token in hand, we use it in further REST API calls to retrieve the definition of each linked service. Each definition is then passed to a quasi-undocumented REST API call that performs the connectivity test for that linked service.
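The REST calls in this post all target the same factory-scoped Azure Resource Manager path and differ only in the final segment. As a hedged sketch, a small helper like the hypothetical New-AdfUri below could build that URL in one place. The US Government management endpoint and api-version 2018-06-01 come from this post's code; the -ArmEndpoint parameter is an assumption for pointing the script at a different Azure cloud.

```powershell
# Builds a factory-scoped ARM REST URL, e.g. .../factories/<name>/testConnectivity?api-version=2018-06-01
function New-AdfUri {
    param(
        [Parameter(Mandatory)][string]$SubscriptionId,
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$DataFactoryName,
        [Parameter(Mandatory)][string]$RelativePath,   # e.g. 'linkedservices/<name>' or 'testConnectivity'
        [string]$ArmEndpoint = 'https://management.usgovcloudapi.net',
        [string]$ApiVersion = '2018-06-01'
    )

    # $($RelativePath) is wrapped because '?' would otherwise be read as part of the variable name
    return "$($ArmEndpoint.TrimEnd('/'))/subscriptions/$SubscriptionId/resourceGroups/$ResourceGroupName/providers/Microsoft.DataFactory/factories/$DataFactoryName/$($RelativePath)?api-version=$ApiVersion"
}
```

For example, a linked service lookup could pass `-RelativePath "linkedservices/$LinkedService"`, while the connectivity test could pass `-RelativePath 'testConnectivity'`.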

```powershell
Function getLinkedService([string]$LinkedService) {
    $ADFEndpoint = "https://management.usgovcloudapi.net/subscriptions/$SubscriptionId/resourceGroups/$ResourceGroupName/providers/Microsoft.DataFactory/factories/$DataFactoryName/linkedservices/$($LinkedService)?api-version=2018-06-01"
    $params = @{
        ContentType = 'application/json'
        Headers     = @{ 'accept' = 'application/json'; 'Authorization' = $BearerToken }
        Method      = 'GET'
        URI         = $ADFEndpoint
    }
    $a = Invoke-RestMethod @params
    Return ConvertTo-Json -InputObject @{ "linkedService" = $a } -Depth 10
}
```

The following code calls the undocumented API that tests a linked service. We found the endpoint by opening the developer tools in Edge and inspecting the network API calls.

```powershell
function testLinkedServiceConnection($Body) {
    $AzureEndpoint = "https://management.usgovcloudapi.net/subscriptions/$SubscriptionID/resourcegroups/$ResourceGroupName/providers/Microsoft.DataFactory/factories/$DataFactoryName/testConnectivity?api-version=2018-06-01"
    $params = @{
        ContentType = 'application/json'
        Headers     = @{ 'accept' = 'application/json'; 'Authorization' = $BearerToken }
        Body        = $Body
        Method      = 'Post'
        URI         = $AzureEndpoint
    }
    # Call the endpoint once and read both fields from the same response
    $Response = Invoke-RestMethod @params
    $RestAPIResult = $Response.succeeded
    $RestAPIErrors = $Response.errors
    if (!$RestAPIErrors) {
        $Errors = "None"
    }
    else {
        $Errors = $RestAPIErrors[0].message
    }
    Return ($RestAPIResult, $Errors)
}
```

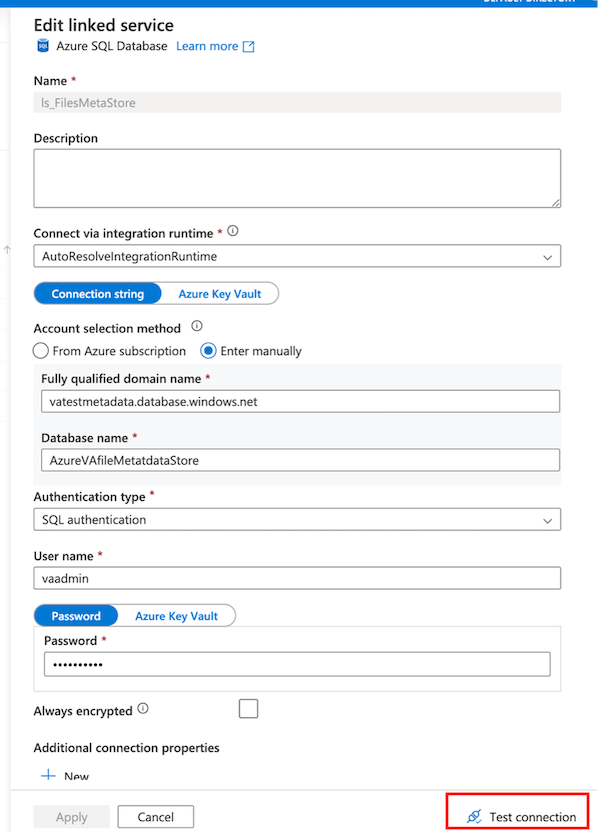

The code above performs the same connectivity check you run manually when testing a linked service during setup in the Azure Data Factory UI, but in an automated way.
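The connectivity function reports only the first error message. If a linked service returns several, a variation like the hypothetical ConvertTo-ConnectivityResult below keeps all of them in one result object. It assumes the response carries the succeeded and errors fields read by testLinkedServiceConnection, with each error exposing a message property; since the API is undocumented, that shape is an observation rather than a contract.

```powershell
# Converts a testConnectivity response into a result object, keeping every error message.
function ConvertTo-ConnectivityResult {
    param(
        [Parameter(Mandatory)][string]$Name,
        [Parameter(Mandatory)]$Response
    )

    # Gather every error message; a null or empty errors field means none were reported
    $messages = @($Response.errors | Where-Object { $_ } | ForEach-Object { $_.message })
    $errorText = if ($messages.Count -gt 0) { $messages -join '; ' } else { 'None' }

    [PSCustomObject]@{
        Name      = $Name
        Succeeded = [bool]$Response.succeeded
        Errors    = $errorText
    }
}
```

Applied to the Invoke-RestMethod response inside the connectivity function, it yields one object per linked service instead of a two-element array.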

So, with a script in hand that could successfully test Azure Data Factory linked services, I added a loop that retrieves the list of linked services from my data factory and then tests each service individually.
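The loop iterates over $lsList, but how that list is built isn't shown. One option, sketched here as an assumption, is the ARM "list linked services by factory" endpoint, which returns a value array and, when there are more results, a nextLink pointing at the next page. Get-AdfLinkedServiceList is a hypothetical name, and the -Invoke parameter (defaulting to Invoke-RestMethod) exists only so the paging logic can be exercised without Azure.

```powershell
# Collects every linked service in a factory by following ARM paging links (nextLink).
function Get-AdfLinkedServiceList {
    param(
        [Parameter(Mandatory)][string]$Uri,          # .../factories/<name>/linkedservices?api-version=2018-06-01
        [Parameter(Mandatory)][string]$BearerToken,  # "Bearer <token>"
        [scriptblock]$Invoke = { param($u, $h) Invoke-RestMethod -Method Get -Uri $u -Headers $h }
    )

    $headers = @{ 'accept' = 'application/json'; 'Authorization' = $BearerToken }
    $items = [System.Collections.Generic.List[object]]::new()
    $next = $Uri
    while ($next) {
        $page = & $Invoke $next $headers
        # @($null) has one element, so skip empty entries explicitly
        foreach ($ls in @($page.value)) { if ($ls) { $items.Add($ls) } }
        $next = $page.nextLink   # absent on the last page, which ends the loop
    }
    return $items
}
```

On the runner this might be called as `$lsList = Get-AdfLinkedServiceList -Uri "https://management.usgovcloudapi.net/subscriptions/$SubscriptionId/resourceGroups/$ResourceGroupName/providers/Microsoft.DataFactory/factories/$DataFactoryName/linkedservices?api-version=2018-06-01" -BearerToken $BearerToken`. Each item carries a name property, which `$listItem.Name` reads because PowerShell property names are case-insensitive.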

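```powershell
# $lsList holds the linked services retrieved from the data factory (each item has a Name)
Foreach ($listItem in $lsList) {
    # Fetch the linked service definition, then send it to the testConnectivity endpoint
    $LinkedServiceBody = getLinkedService $listItem.Name
    $LinkServiceResult = testLinkedServiceConnection $LinkedServiceBody
    $ResultOutput = $listItem.Name + ": Connection Result: " + $LinkServiceResult[0] + " Errors: " + $LinkServiceResult[1]
    Write-Host $ResultOutput
}
```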
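Write-Host prints each result but leaves nothing for later steps to act on. A hedged variation collects results as objects so the run can be summarized and failures counted. Get-ConnectivitySummary is a hypothetical helper, and the two sample objects are illustrative values mirroring the name, result, and error fields the loop prints.

```powershell
# Summarizes per-linked-service results (objects with Name, Succeeded, Errors).
function Get-ConnectivitySummary {
    param([Parameter(Mandatory)][AllowEmptyCollection()][object[]]$Results)

    $failed = @($Results | Where-Object { -not $_.Succeeded })
    [PSCustomObject]@{
        Total       = $Results.Count
        Failed      = $failed.Count
        FailedNames = ($failed.Name -join ', ')
    }
}

# Inside the loop, each iteration could emit an object instead of only printing, e.g.:
# $results = foreach ($listItem in $lsList) {
#     $r = testLinkedServiceConnection (getLinkedService $listItem.Name)
#     [PSCustomObject]@{ Name = $listItem.Name; Succeeded = [bool]$r[0]; Errors = $r[1] }
# }

# Illustrative sample results
$results = @(
    [PSCustomObject]@{ Name = 'ls_sql';  Succeeded = $true;  Errors = 'None' }
    [PSCustomObject]@{ Name = 'ls_blob'; Succeeded = $false; Errors = 'Login failed' }
)
$summary = Get-ConnectivitySummary -Results $results
$results | Format-Table Name, Succeeded, Errors
Write-Host "$($summary.Failed) of $($summary.Total) linked services failed: $($summary.FailedNames)"
```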

The only thing left to do was to adapt the script for our CI/CD implementation. This wasn't too difficult; it only required changing the script to accept a parameter for a config file containing all of the settings for the environment we were deploying to.

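```powershell
param(
    # Path to the JSON settings file for the target environment (e.g. Dev, PreProd, Prod)
    [Parameter(Mandatory)]
    [String]$ConfigFile
)

# Read the whole file as one string before parsing it as JSON
$ConfigObject = Get-Content -Raw -Path $ConfigFile | ConvertFrom-Json
$ResourceGroupName = $ConfigObject.resource_group_name
$DataFactoryName   = $ConfigObject.adf.adf_factory_name
$SubscriptionID    = $ConfigObject.subscription_id
```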
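For reference, here is one config file shape the param block would accept, inferred only from the three properties it reads (resource_group_name, adf.adf_factory_name, and subscription_id). The values are placeholders, and a real environment file likely carries other deployment settings as well. The snippet writes the sample to a temp path and reads it back the same way the script does.

```powershell
# Hypothetical config for one environment; only these three settings are read by the script.
$sampleConfig = @'
{
  "subscription_id": "00000000-0000-0000-0000-000000000000",
  "resource_group_name": "rg-data-dev",
  "adf": {
    "adf_factory_name": "adf-data-dev"
  }
}
'@

$path = Join-Path ([System.IO.Path]::GetTempPath()) 'adf-dev.json'
Set-Content -Path $path -Value $sampleConfig

# Parse it the same way as the param block above
$ConfigObject = Get-Content -Raw -Path $path | ConvertFrom-Json
$ResourceGroupName = $ConfigObject.resource_group_name
$DataFactoryName   = $ConfigObject.adf.adf_factory_name
$SubscriptionID    = $ConfigObject.subscription_id
Write-Host "Testing $DataFactoryName in $ResourceGroupName ($SubscriptionID)"
```

Keeping one such file per environment means the same script runs unchanged against Dev, PreProd, and Prod; only the path passed to -ConfigFile changes.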

After that, all that was needed was to call the PowerShell script from the GitHub Actions .yml file, providing the config file path, and that's all she wrote. Now, every time a pull request from development is completed and committed (essentially a deployment), this linked service check runs.
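For the check to actually stop a deployment, the script has to fail its workflow step when any linked service fails. A minimal sketch, assuming results are collected as objects with Name, Succeeded, and Errors properties: it prints GitHub Actions `::error::` workflow commands so failures appear as annotations on the run, and returns an exit code for the script to pass to `exit`. Both function names are hypothetical.

```powershell
# Escapes a message for a GitHub Actions workflow command (% and line breaks must be encoded).
function Format-GitHubErrorCommand {
    param([Parameter(Mandatory)][string]$Message)

    # Encode '%' first so the encodings added for CR/LF are not re-escaped
    $escaped = $Message -replace '%', '%25' -replace "`r", '%0D' -replace "`n", '%0A'
    return "::error::$escaped"
}

# Prints one annotation per failed linked service and returns the exit code for the step.
function Get-LinkedServiceExitCode {
    param([Parameter(Mandatory)][AllowEmptyCollection()][object[]]$Results)

    $failed = @($Results | Where-Object { -not $_.Succeeded })
    foreach ($r in $failed) {
        # Write-Host goes to stdout on the runner, where workflow commands are parsed
        Write-Host (Format-GitHubErrorCommand -Message "Linked service $($r.Name) failed: $($r.Errors)")
    }
    if ($failed.Count -gt 0) { return 1 }
    return 0
}

# At the end of the script, a non-zero exit code fails the workflow step:
# exit (Get-LinkedServiceExitCode -Results $results)
```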

Conclusion

This was a particularly fun adventure and something that I had never done before. It was nice to know that we were able to further stabilize our CI/CD implementation with a good base script and a little bit more digging through REST API documentation.

If you find yourself in the same situation and need some help, we here at Key2 would be glad to assist you in your ventures.

